- Feb. 9, 2021


Building a Continuous Delivery Pipeline with GitHub and Helm to deploy Magnolia to Kubernetes

Containerizing an application and deploying it to Kubernetes via a continuous delivery (CD) pipeline simplifies its maintenance and makes updates faster and easier.

This article will guide you through the steps to containerize Magnolia using Docker (dockerizing) and set up a complete CD pipeline to a Kubernetes (K8s) cluster.

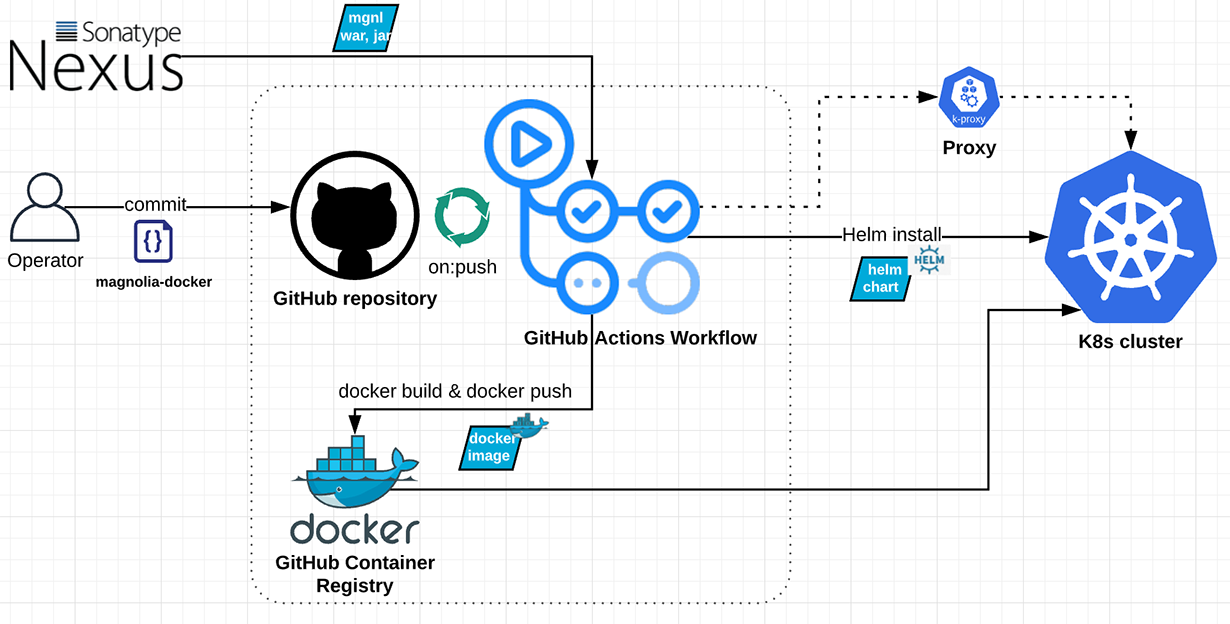

The pipeline will create a Magnolia bundle, build a Docker image, push it to the GitHub Container Registry, and deploy a Helm chart that uses the Docker image to Kubernetes. As a result, we will be running a containerized Magnolia release in a local Kubernetes cluster.

Key components

Below is a high-level flow chart of the CD pipeline containing the key components:

Kubernetes

Kubernetes (K8s) is an open-source container orchestration system that automates the deployment, scaling, and management of containerized applications. It provides a common framework for running distributed systems, giving development teams consistent infrastructure from development to production.

Helm Chart

Helm is a package manager for Kubernetes. It uses a packaging format called charts. Helm charts define, install and upgrade Kubernetes applications.

GitHub Actions

By automating your software development workflows, GitHub Actions allows you to easily set up continuous integration (CI) and continuous deployment (CD) directly in your repository–without the help of other CI/CD systems.

A workflow is an automated process that you set up in your GitHub repository. You can build, test, package, release, or deploy any GitHub project using a workflow. It consists of different tasks, called actions, that can be run automatically on certain events, for example, a pull request merge or a git push.

GitHub Container Registry

GitHub Container Registry enables you to host and manage Docker container images in your organization or a personal user account on GitHub. You can also configure who can manage and access packages using fine-grained permissions.

Prerequisites

To build the pipeline, we’ll install several command-line interface (CLI) tools, set up a local Kubernetes cluster and a GitHub Repository hosting our deployment files and pipeline.

minikube

minikube enables you to run a single-node Kubernetes cluster on a virtual machine (VM) on your personal computer. You can use it to try Kubernetes or for your daily development work.

If you already have a Kubernetes cluster that you can use, for example, AWS EKS or Google GKE, you can skip this step. Otherwise, please install minikube by following its documentation.

With minikube installed on your machine, you can start the cluster using a specific Kubernetes version:

$ minikube start --kubernetes-version=1.16.0
😄 minikube v1.6.2 on Darwin 10.14.5
✨ Automatically selected the 'virtualbox' driver (alternates: [])
🔥 Creating virtualbox VM (CPUs=2, Memory=2000MB, Disk=20000MB) ...
🐳 Preparing Kubernetes v1.16.0 on Docker '19.03.5' ...
💾 Downloading kubeadm v1.16.0
💾 Downloading kubelet v1.16.0
🚜 Pulling images ...
🚀 Launching Kubernetes ...
⌛ Waiting for cluster to come online ...
🏄 Done! kubectl is now configured to use "minikube"

kubectl

kubectl is a Kubernetes command-line tool to run commands against a Kubernetes cluster. It can be used to deploy applications, inspect and manage cluster resources, and view logs.

Install kubectl and verify your minikube cluster:

$ kubectl get pods -n kube-system
NAME                               READY   STATUS    RESTARTS   AGE
coredns-5644d7b6d9-nq48z           1/1     Running   1          3h27m
coredns-5644d7b6d9-sqgrb           1/1     Running   1          3h27m
etcd-minikube                      1/1     Running   1          3h26m
kube-addon-manager-minikube        1/1     Running   1          3h26m
kube-apiserver-minikube            1/1     Running   1          3h26m
kube-controller-manager-minikube   1/1     Running   2          3h26m
kube-proxy-zw787                   1/1     Running   1          3h27m
kube-scheduler-minikube            1/1     Running   2          3h26m
storage-provisioner                1/1     Running   1          3h27m

Because our Kubernetes cluster is running inside a VM, we’ll also use kubectl proxy to forward requests from a localhost address to the Kubernetes API server inside the VM.

$ kubectl proxy --port=8080 --accept-hosts='.*\.ngrok.io$' &
[1] 30147
$ Starting to serve on 127.0.0.1:8080

ngrok

As we will later access the Kubernetes cluster from GitHub Actions, we’ll need a public proxy that routes requests to your Kubernetes API server. You can use ngrok, a free tunneling system, to expose your local services externally.

It only takes a few minutes to register for an account and set up a tunnel to localhost. To connect your local services with ngrok please follow the documentation.

$ ngrok http http://127.0.0.1:8080
ngrok by @inconshreveable                                  (Ctrl+C to quit)
Session Status    online
Account           Do Hoang Khiem (Plan: Free)
Version           2.3.35
Region            United States (us)
Web Interface     http://127.0.0.1:4040
Forwarding        http://66ab386be8b6.ngrok.io -> http://127.0.0.1:8080
Forwarding        https://66ab386be8b6.ngrok.io -> http://127.0.0.1:8080
Connections       ttl     opn     rt1     rt5     p50     p90
                  295     0       0.00    0.00    8.84    46.03
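Before wiring the tunnel into GitHub, it can help to confirm that both the local proxy and the public ngrok URL actually reach the API server. The sketch below is one way to do that, assuming curl is installed. The helper name check_endpoint is made up for this article, /version is the API server's version endpoint, and the ngrok hostname is the one from the sample output above, so yours will differ.

```shell
# check_endpoint URL: print "OK <url>" if the Kubernetes API answers on
# <url>/version, or "FAIL <url>" otherwise. Hypothetical helper name.
check_endpoint() {
  if curl -fsS --max-time 10 "$1/version" >/dev/null 2>&1; then
    echo "OK $1"
  else
    echo "FAIL $1"
    return 1
  fi
}

# Usage (replace the second URL with your own ngrok forwarding address):
#   check_endpoint http://127.0.0.1:8080
#   check_endpoint https://66ab386be8b6.ngrok.io
```

If the first check passes and the second fails, the problem is most likely in the tunnel or in the --accept-hosts pattern rather than in the cluster itself.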

Helm

Helm is an essential tool for working with Kubernetes. It isn’t needed to complete this deployment, but it’s still good to have on your local machine to verify and validate your deployment in the end.

You can install the Helm 3 package manager by following the documentation.

Check the Helm version after installation.

$ helm version

version.BuildInfo{Version:"v3.2.4", GitCommit:"0ad800ef43d3b826f31a5ad8dfbb4fe05d143688", GitTreeState:"dirty", GoVersion:"go1.14.3"}

GitHub Repository

You will need to set up a GitHub Repository, GitHub Container Registry and Personal Access Token.

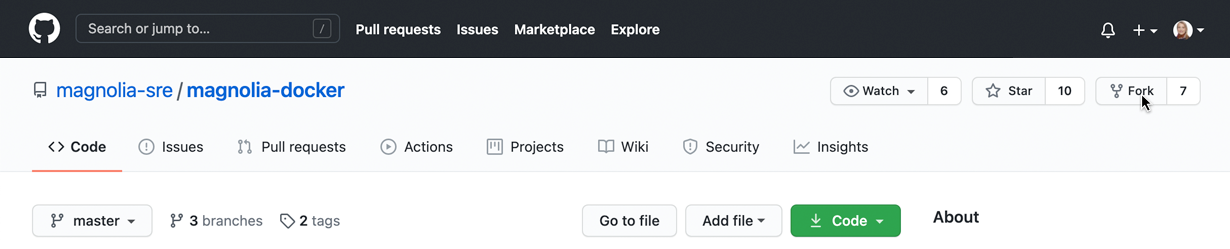

1. Fork magnolia-sre/magnolia-docker

2. Enable the GitHub Container Registry

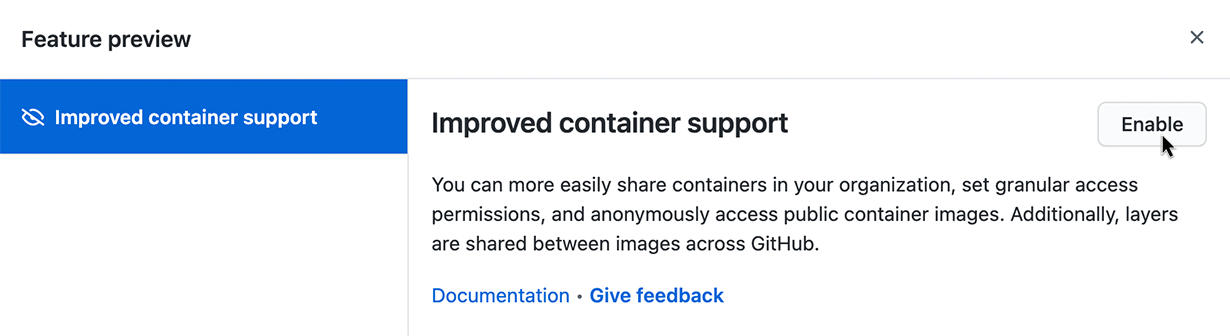

To use the GitHub Container Registry you have to enable improved container support. Navigate to “Feature preview” in the top-right menu underneath your account profile, then enable improved container support.

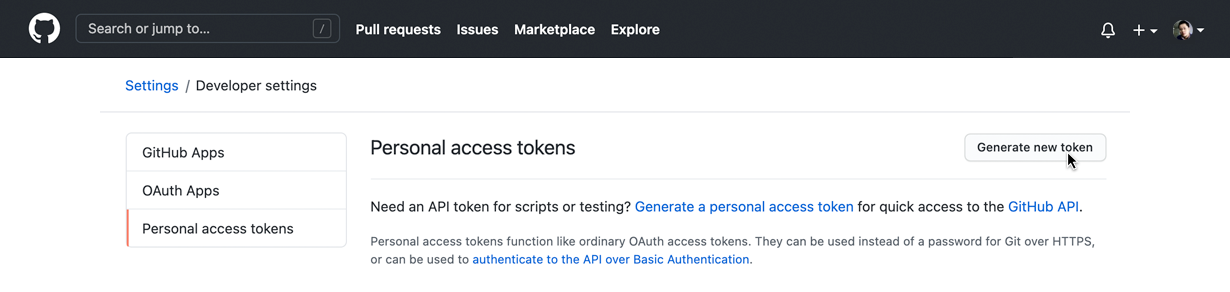

3. Generate a GitHub personal access token

We have to use a GitHub Personal Access Token for pushing Docker images to the GitHub Container Registry. You can generate a new token via Settings → Developer settings → Personal access tokens. Click on “Generate new token”:

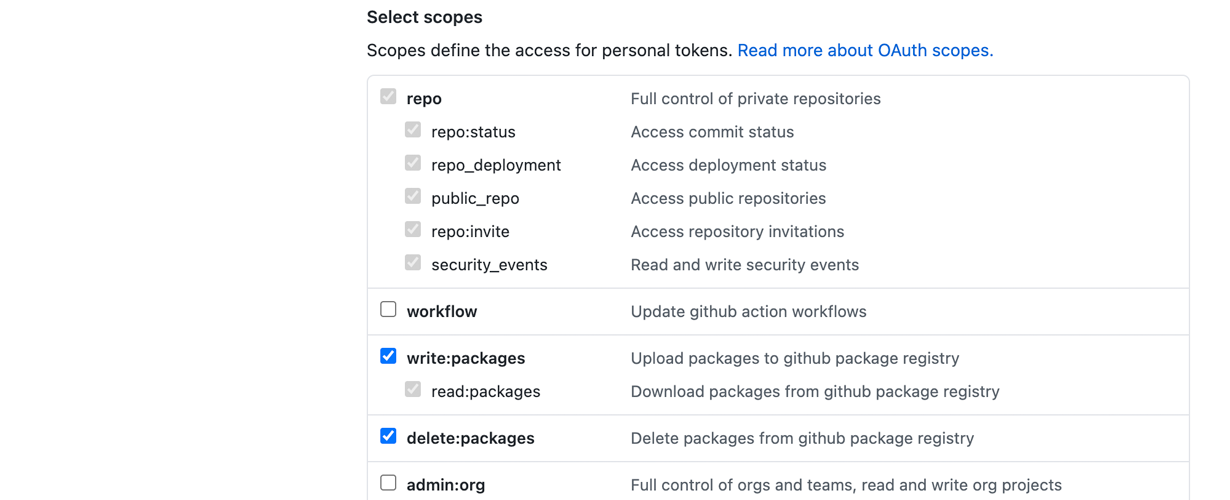

On the next screen, select at least the write:packages and delete:packages scopes:

Once created, copy the token, as it won’t be visible again.

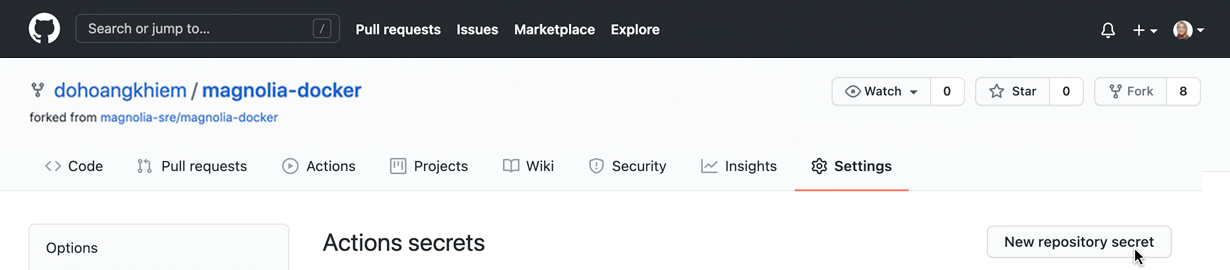

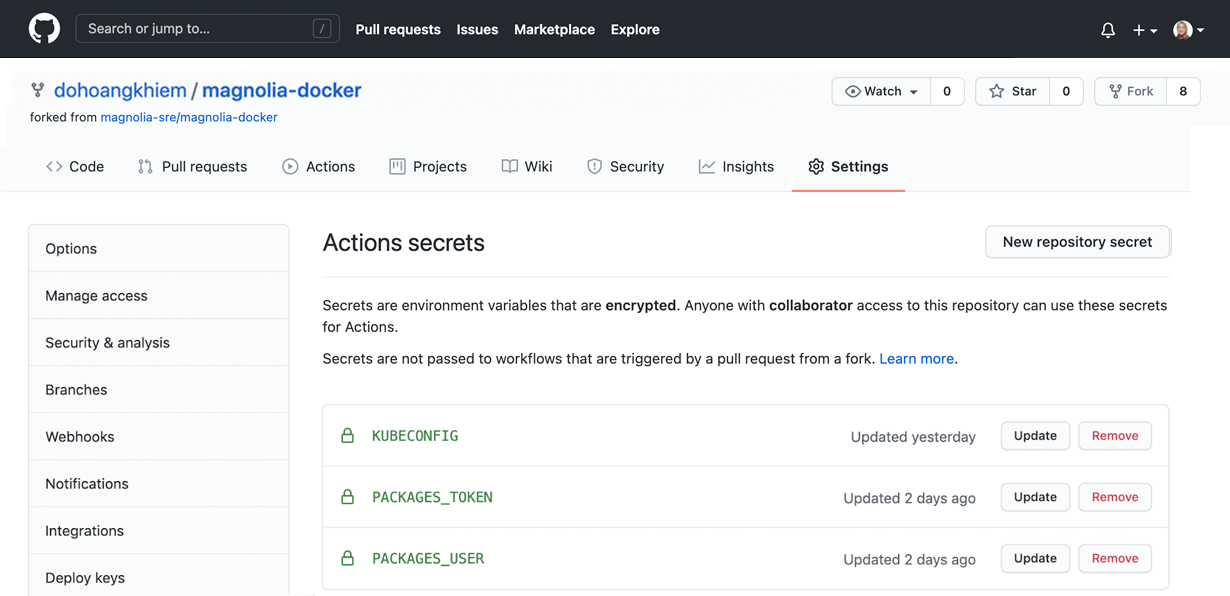
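To confirm that the token works before storing it as a secret, you can try logging in to the registry from your own machine. This is a sketch, assuming Docker is installed locally. ghcr.io is the host name of the GitHub Container Registry, while the function name ghcr_login and the variables GH_USER and GH_TOKEN are placeholders chosen for this example.

```shell
# ghcr_login USER TOKEN: log in to the GitHub Container Registry.
# The token is piped through stdin so that it is not passed to docker
# as a command-line argument.
ghcr_login() {
  printf '%s' "$2" | docker login ghcr.io -u "$1" --password-stdin
}

# Usage (GH_USER and GH_TOKEN are placeholder variables you set yourself):
#   ghcr_login "$GH_USER" "$GH_TOKEN"
```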

4. Configure Secrets in your magnolia-docker repository

Go back to your repository and configure your Secrets under Settings → Secrets:

Create a Secret for each of the following key-value pairs:

PACKAGES_USER: Your GitHub username

PACKAGES_TOKEN: Your personal access token

KUBECONFIG: Your Kubernetes configuration for accessing your cluster

If you’re accessing the cluster from your local machine you can find the config file at ~/.kube/config, or you can use kubectl config view to view the current config.

If you see an attribute such as certificate-authority, client-certificate, or client-key in the config file which specifies a path to a .crt or .key file, you have to replace it with certificate-authority-data, client-certificate-data, client-key-data respectively, and replace the file path with the base64-encoded value of the .crt or .key content.

For example, to get the base64-encoded value of certificate-authority-data:

cat ~/.minikube/ca.crt | base64
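One detail worth checking: some base64 implementations (GNU coreutils, for instance) wrap their output at 76 characters, while a *-data field in the config is easiest to fill with a single unbroken line. A minimal sketch that strips the line breaks, using a hypothetical helper name b64_oneline:

```shell
# b64_oneline FILE: base64-encode FILE and remove any line wrapping so the
# result can be pasted into a *-data field as one line.
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Usage:
#   b64_oneline ~/.minikube/ca.crt
```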

Below is an example of KUBECONFIG (the base64 values of the certificate and key are truncated). The ngrok endpoint is used as the server address of the cluster.

For this demonstration, we can set insecure-skip-tls-verify: true to ignore untrusted certificates:

apiVersion: v1
clusters:
- cluster:
    server: https://66ab386be8b6.ngrok.io
    insecure-skip-tls-verify: true
  name: minikube
contexts:
- context:
    cluster: minikube
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:
    client-certificate-data: LS0tLS1CRUdJTi...
    client-key-data: LS0tLS1CRUdJ...
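Since the GitHub-hosted runner has no access to files on your machine, a config that still points at local .crt or .key paths will fail in the pipeline. The following is a rough check, not a full validator: it simply searches for the three path-style attributes mentioned earlier. The function name kubeconfig_file_refs is an assumption made for this example.

```shell
# kubeconfig_file_refs FILE: print every line (with its line number) that
# still references a certificate or key by file path. Prints nothing and
# returns non-zero, as grep does, when no such line is found.
kubeconfig_file_refs() {
  grep -nE '^[[:space:]]*(certificate-authority|client-certificate|client-key):' "$1"
}

# Usage:
#   kubeconfig_file_refs ~/.kube/config || echo "no file references left"
```

As an alternative to replacing the paths by hand, kubectl config view --raw --flatten --minify prints the current context with the certificate data already embedded. The server address still has to be changed to the ngrok endpoint afterwards.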

Magnolia-Docker Image

In this example we use the magnolia-empty-webapp bundle from the Magnolia public releases on Nexus, along with the magnolia-rest-services module for liveness and readiness endpoints. Create the following POM in the webapp directory:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <parent>
    <groupId>info.magnolia.sre</groupId>
    <artifactId>magnolia-docker-bundle-parent</artifactId>
    <version>1.0-SNAPSHOT</version>
    <relativePath>../pom.xml</relativePath>
  </parent>
  <artifactId>magnolia-docker-bundle-webapp</artifactId>
  <name>Magnolia Docker Image Bundle Webapp</name>
  <packaging>war</packaging>
  <dependencies>
    <dependency>
      <groupId>info.magnolia</groupId>
      <artifactId>magnolia-empty-webapp</artifactId>
      <type>war</type>
    </dependency>
    <dependency>
      <groupId>info.magnolia</groupId>
      <artifactId>magnolia-empty-webapp</artifactId>
      <type>pom</type>
    </dependency>
    <!-- Contains the liveness/readiness end point: .rest/status -->
    <dependency>
      <groupId>info.magnolia.rest</groupId>
      <artifactId>magnolia-rest-services</artifactId>
    </dependency>
  </dependencies>
  <build>
    <finalName>ROOT</finalName>
    <plugins>
      <plugin>
        <groupId>io.fabric8</groupId>
        <artifactId>docker-maven-plugin</artifactId>
        <configuration>
          <skip>true</skip>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>

You can test building a Magnolia bundle locally by running this command from the root directory:

$ mvn package -B -U

The bundle will then be used to build a Docker image based on the Tomcat 9 with OpenJDK 11 slim image. This is the Dockerfile which is located under src/main/docker:

# ----------------------------------------------------------
# Magnolia Docker Image
# ----------------------------------------------------------
#
FROM tomcat:9.0.38-jdk11-openjdk-slim
MAINTAINER sre@magnolia-cms.com
ENV JAVA_OPTS="-Dmagnolia.home=/opt/magnolia -Dmagnolia.resources.dir=/opt/magnolia -Dmagnolia.update.auto=true -Dmagnolia.author.key.location=/opt/magnolia/activation-key/magnolia-activation-keypair.properties"
# Copy Tomcat setenv.sh
COPY src/main/docker/tomcat-setenv.sh $CATALINA_HOME/bin/setenv.sh
RUN chmod +x $CATALINA_HOME/bin/setenv.sh
# This directory is used for Magnolia property "magnolia.home"
RUN mkdir /opt/magnolia
RUN chmod 755 /opt/magnolia
RUN rm -rf $CATALINA_HOME/webapps/ROOT
COPY webapp/target/ROOT.war $CATALINA_HOME/webapps/

You can test building a Docker image locally from the root directory of the project:

$ mvn -B docker:build

Magnolia Helm Chart

Magnolia Helm Chart is located in the helm-chart directory. Below is the structure of the chart:

.
├── Chart.yaml
├── templates
│   ├── configmap.yaml
│   ├── _helpers.tpl
│   ├── ingress.yaml
│   ├── service.yaml
│   ├── statefulset.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml

2 directories, 8 files

Chart.yaml

This file defines chart parameters like name, description, type, version, and appVersion.

values.yaml

The file supplies the values to substitute in templates. We can define the image, tag, pullPolicy, service ports, ingress hostnames, resources limits, and custom app configurations like JAVA_OPTS.

templates/statefulset.yaml

This file defines the workload for the application. For this deployment, we use 2 StatefulSets, representing Magnolia author and public. StatefulSet allows us to assign persistent names–sticky identities–and maintain pods in a specific order. In the pod spec of StatefulSet we define containers, volumes, and probes.
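The sticky identity is easiest to see in the pod names: each pod is named after its StatefulSet plus an ordinal index starting at 0, and it keeps that name across restarts. A small illustration in plain shell, with no cluster required. The helper statefulset_pod_names is made up for this article, and the StatefulSet name is the one this deployment produces for the author instance.

```shell
# statefulset_pod_names NAME REPLICAS: list the pod names Kubernetes
# assigns to a StatefulSet called NAME with REPLICAS replicas.
statefulset_pod_names() {
  i=0
  while [ "$i" -lt "$2" ]; do
    echo "$1-$i"
    i=$((i + 1))
  done
}

statefulset_pod_names github-magnolia-docker-author 2
# prints:
#   github-magnolia-docker-author-0
#   github-magnolia-docker-author-1
```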

templates/service.yaml

This file defines the Services for the Magnolia author and public instances, providing load balancing and access to applications in underlying pods.

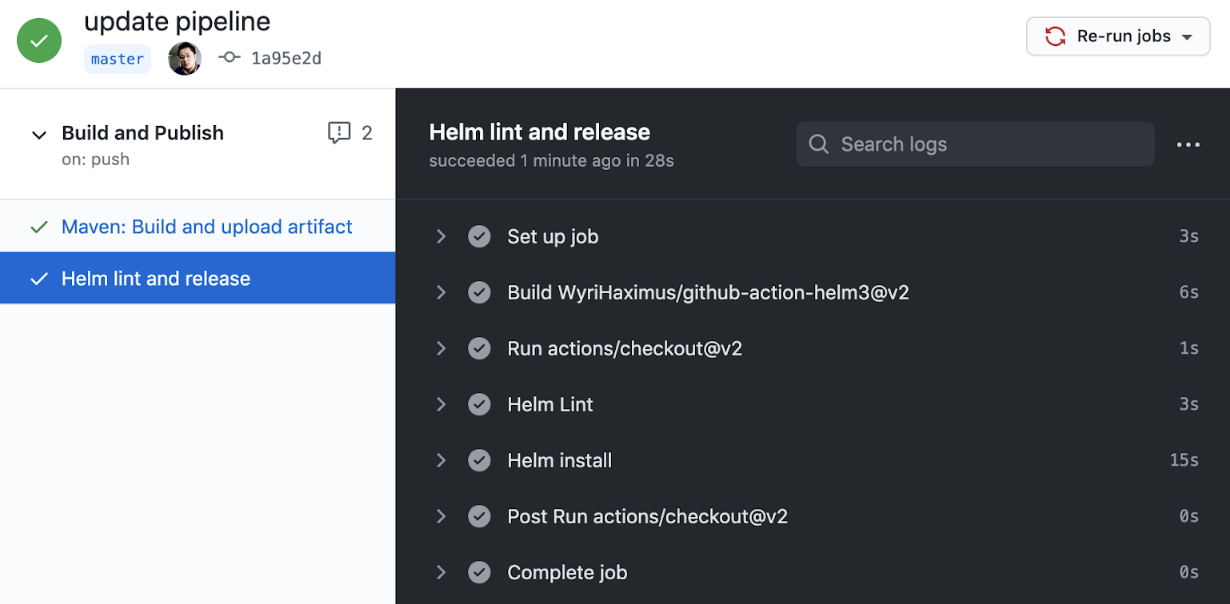

Execute GitHub Actions Workflow

Workflow Configuration

The GitHub Actions workflow is defined in .github/workflows/pipeline.yml. GitHub Actions automatically picks up any .yml or .yaml file in the .github/workflows directory and runs it when its trigger event occurs.

name: Build and Publish
on:
  push:
    branches: [ master ]
jobs:
  build:
    name: 'Maven: Build and upload artifact'
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - name: Set up JDK 11
        uses: actions/setup-java@v1
        with:
          java-version: 11
      - name: Build Magnolia bundle
        run: mvn package -B -U
      - name: Build Magnolia Docker Image
        run: mvn -B -Dgithub-registry=${{github.repository_owner}} docker:build
      - name: Push Magnolia Docker Image
        run: mvn -B -Ddocker.username=${{secrets.PACKAGES_USER}} -Ddocker.password=${{secrets.PACKAGES_TOKEN}} -Dgithub-registry=${{github.repository_owner}} docker:push
  helm-lint:
    name: Helm lint and release
    runs-on: ubuntu-latest
    needs: build
    steps:
      - uses: actions/checkout@v2
      - name: Helm Lint
        uses: WyriHaximus/github-action-helm3@v2
        with:
          exec: helm lint ./helm-chart
      - name: Helm install
        uses: WyriHaximus/github-action-helm3@v2
        with:
          exec: helm upgrade --install github-magnolia-docker ./helm-chart
          kubeconfig: '${{ secrets.KUBECONFIG }}'

At the top of the file, we define the event that triggers the workflow, for example, a push event to the master branch.

The file then configures 2 jobs that are executed on a GitHub-hosted runner, a pre-configured virtual environment, using the latest Ubuntu release “ubuntu-latest”.

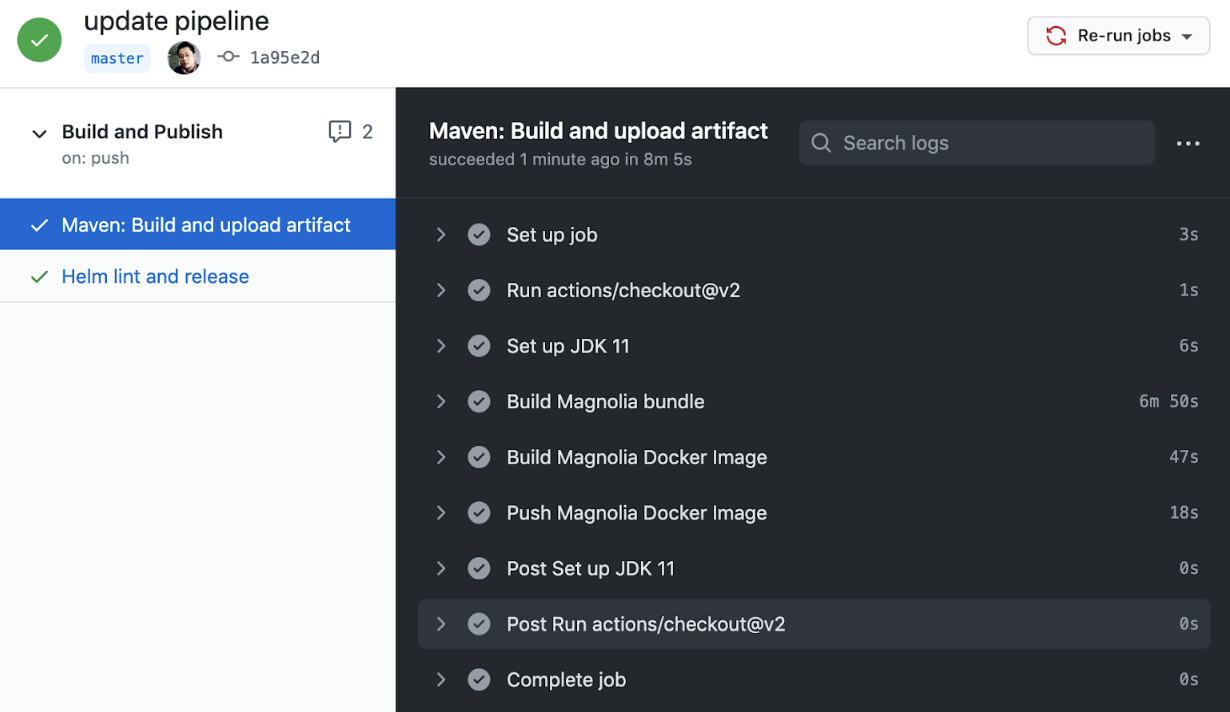

1. Maven: Build and upload artifact

This job checks out the git repository to the runner, installs JDK 11, and builds the Magnolia bundle and Docker image. Finally, it pushes the image to the GitHub Container Registry.

2. Helm lint and release

This job executes helm lint to verify that the chart is well-formed, then installs the chart via Helm.
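For debugging, most of the same sequence can be run by hand before pushing. The sketch below mirrors the commands from the workflow file and stops at the first failure. It assumes mvn and helm are installed and that your current kubectl context points at the target cluster. It deliberately leaves out the docker:push step, so the cluster must already be able to pull the image. The function name run_pipeline_locally and its owner argument are placeholders for this example.

```shell
# run_pipeline_locally OWNER: run the build, lint and release steps of the
# workflow in order, aborting at the first command that fails.
# OWNER stands in for ${{github.repository_owner}} in the workflow.
run_pipeline_locally() {
  mvn package -B -U &&
  mvn -B -Dgithub-registry="$1" docker:build &&
  helm lint ./helm-chart &&
  helm upgrade --install github-magnolia-docker ./helm-chart
}

# Usage, from the root directory of the repository:
#   run_pipeline_locally your-github-username
```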

Trigger Workflow

The workflow is triggered by pushing a change to the master branch of the repository. You can follow the run in the Actions tab of your repository.
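If you have no pending change, an empty commit is enough to start a run. A sketch, assuming your fork is configured as the origin remote; the function name and the commit message are arbitrary.

```shell
# trigger_pipeline: create an empty commit and push it to master, which
# fires the workflow's push trigger without touching any file.
trigger_pipeline() {
  git commit --allow-empty -m "Trigger CD pipeline" &&
  git push origin master
}

# Usage, from inside your clone of the fork:
#   trigger_pipeline
```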

Verify Deployment

Now that the pipeline deployment has finished successfully, you can use Helm to verify the release in Kubernetes.

$ helm list
NAME                     NAMESPACE   REVISION   UPDATED                                   STATUS     CHART            APP VERSION
github-magnolia-docker   default     1          2020-11-18 07:42:32.240781604 +0000 UTC   deployed   magnolia-0.1.0   6.2.3

The output shows that we have deployed a github-magnolia-docker release in the cluster, using the magnolia-0.1.0 chart. The app version is 6.2.3.

To see more details about underlying objects we can use the kubectl command:

$ kubectl get statefulset
NAME                            READY   AGE
github-magnolia-docker-author   1/1     21h
github-magnolia-docker-public   1/1     21h

$ kubectl get pods
NAME                              READY   STATUS    RESTARTS   AGE
github-magnolia-docker-author-0   1/1     Running   0          21h
github-magnolia-docker-public-0   1/1     Running   0          21h

$ kubectl get svc
NAME                            TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
github-magnolia-docker-author   ClusterIP   10.110.231.225   <none>        80/TCP    21h
github-magnolia-docker-public   ClusterIP   10.98.85.7       <none>        80/TCP    21h

You can use port forwarding to access your Magnolia services via localhost, for example, the Magnolia author service:

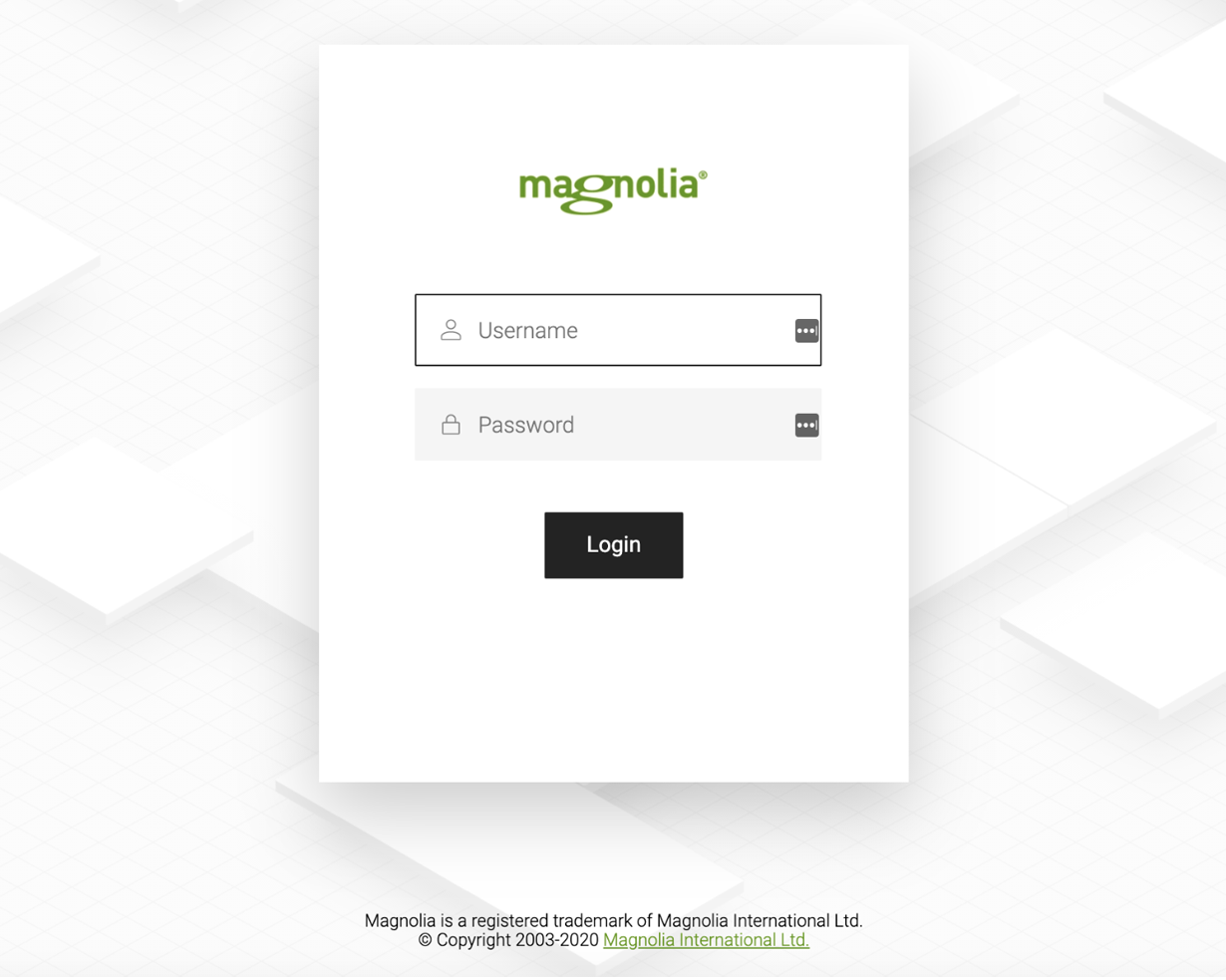

$ kubectl port-forward svc/github-magnolia-docker-author 8888:80

Open http://localhost:8888 in a browser and log in with superuser/superuser.
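Magnolia can take a while to start, so the login page may not respond immediately. The sketch below polls the .rest/status endpoint that the magnolia-rest-services module provides for the liveness and readiness probes. It assumes curl is installed and that the port-forward is running. The function name wait_for_magnolia and its retry defaults are choices made for this example, and if the endpoint requires authentication in your setup, the check will need credentials added.

```shell
# wait_for_magnolia BASE_URL [ATTEMPTS] [DELAY_SECONDS]
# Poll BASE_URL/.rest/status until it answers with a success status,
# giving up after ATTEMPTS tries (default: 30 tries, 5 seconds apart).
wait_for_magnolia() {
  attempts=${2:-30}
  delay=${3:-5}
  n=1
  while [ "$n" -le "$attempts" ]; do
    if curl -fsS -o /dev/null "$1/.rest/status" 2>/dev/null; then
      echo "ready after $n attempt(s)"
      return 0
    fi
    sleep "$delay"
    n=$((n + 1))
  done
  echo "not ready after $attempts attempt(s)" >&2
  return 1
}

# Usage:
#   wait_for_magnolia http://localhost:8888
```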

Taking Magnolia’s Containerization to the Next Level

Containerizing Magnolia is cool, isn’t it? But that’s not the whole story. You can make it part of a CI/CD workflow that seamlessly integrates with GitHub and your Kubernetes cluster. CI/CD is an essential aspect of DevOps culture and vital to get right.

GitHub Actions proves to be an efficient and easy-to-use tool to make things work in reality. From here you can add more actions to the pipeline, like test steps, updating the bundle descriptor, or service definitions in the Helm chart.

For updates and contributions, take a look at our GitHub repository.